When it comes to concurrency, many programming languages adopt the shared memory/state model. Go, however, distinguishes itself by building Communicating Sequential Processes (CSP) into the language (it still offers shared-memory primitives in the sync package). In CSP, a program consists of parallel processes that do not share state; instead, they communicate and synchronize their actions over channels. For developers adopting Go, it is therefore crucial to understand how channels work. In this article, I will illustrate channels using the delightful analogy of Gophers running their imaginary cafe, as I firmly believe that humans are visual learners.

Scenario

Partier, Candier, and Stringer are running a cafe. Because making coffee takes more time than accepting orders, Partier takes orders from customers and passes them to the kitchen, where Candier and Stringer prepare the coffee.

Gopher’s Cafe

Unbuffered Channels

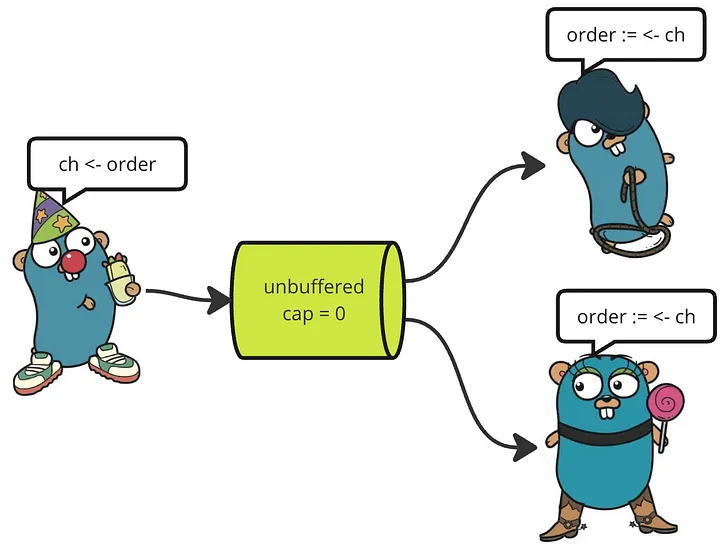

Initially, the cafe operates in the simplest manner: whenever a new order is received, Partier puts the order in the channel and waits until either Candier or Stringer takes it before accepting any new orders. This communication between Partier and the kitchen is achieved using an unbuffered channel, created with ch := make(chan order). When there are no pending orders in the channel, Stringer and Candier remain idle, even though both are ready, and wait for a new order to arrive.

Unbuffered channel

When a new order is received, Partier places it into the channel, making it available to either Candier or Stringer. However, before accepting any new orders, Partier must wait until one of them retrieves the order: with an unbuffered channel, the send (ch <- o) only completes when a receiver takes the value.

As both Stringer and Candier are available to take the new order, it will be taken immediately by one of them. However, which of them gets the order cannot be guaranteed or predicted. The selection between Stringer and Candier is non-deterministic; it depends on factors such as scheduling and the internal mechanics of the Go runtime. Let's assume that Candier gets this first order.
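To observe this non-determinism yourself, here is a small sketch (the distribute function and delivery type are made up for illustration) in which two workers receive from the same unbuffered channel and report back which orders they took. The split between Candier and Stringer may differ from run to run, but every order is delivered to exactly one of them.

```go
package main

import (
	"fmt"
	"sync"
)

type order int

// delivery records which worker received which order.
type delivery struct {
	worker string
	o      order
}

// distribute sends orders 0..n-1 over an unbuffered channel shared by two
// workers and returns who received what. The Go runtime decides which
// waiting worker gets each order.
func distribute(n int) map[string][]order {
	ch := make(chan order)
	results := make(chan delivery, n) // buffered so workers never block on reporting
	var wg sync.WaitGroup

	for _, name := range []string{"Candier", "Stringer"} {
		wg.Add(1)
		go func(name string) {
			defer wg.Done()
			for o := range ch {
				results <- delivery{worker: name, o: o}
			}
		}(name)
	}

	for i := 0; i < n; i++ {
		ch <- order(i)
	}
	close(ch)      // lets both range loops finish
	wg.Wait()      // every delivery has now been reported
	close(results) // safe: nobody sends to results anymore

	received := map[string][]order{}
	for d := range results {
		received[d.worker] = append(received[d.worker], d.o)
	}
	return received
}

func main() {
	for name, orders := range distribute(6) {
		fmt.Println(name, "got", orders)
	}
}
```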

After Candier completes processing the first order, she returns to the waiting state. If no new orders arrive, both workers, Candier and Stringer, remain idle until Partier places another order into the channel for them to process.

When a new order arrives, both Stringer and Candier are available to process it. Even though Candier just processed the previous order, which worker receives the new one is still non-deterministic. In this scenario, let's say Candier is assigned this second order again.

Distributing messages in channel is non-deterministic

A new order, order3, arrives. Candier is still busy processing order2, so she is not waiting at the line order := <-ch. Stringer is the only available worker to receive order3, so he gets it.

Immediately after order3 is sent to Stringer, order4 arrives. At this point, both Stringer and Candier are busy with their respective orders, so no one is available to take order4. Since the channel is unbuffered, Partier has to wait until either Stringer or Candier becomes available to take order4 before accepting any new orders.

Buffered Channels

An unbuffered channel works, but it limits overall throughput. It would be better if Partier could accept a number of orders and leave them for the kitchen to pick up one after another. That is achievable with a buffered channel, created with ch := make(chan order, 3). Now, even if Stringer and Candier are busy with their orders, Partier can still leave new orders in the channel and keep accepting more as long as the channel is not full, i.e. up to 3 pending orders.
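Here is a small sketch of the buffered version, assuming the capacity of 3 from the text. With no worker receiving, the hypothetical queueWithoutWaiting helper uses select with a default case to probe whether a send would block: the first three orders fit in the buffer, the fourth does not, and orders leave the buffer in first-in, first-out order.

```go
package main

import "fmt"

type order int

// queueWithoutWaiting (hypothetical helper) tries to put each order into ch
// without blocking and returns how many fit before the buffer was full.
func queueWithoutWaiting(ch chan<- order, orders ...order) int {
	queued := 0
	for _, o := range orders {
		select {
		case ch <- o:
			queued++
		default:
			return queued // buffer full: a plain ch <- o would block here
		}
	}
	return queued
}

func main() {
	ch := make(chan order, 3) // room for up to 3 pending orders
	n := queueWithoutWaiting(ch, 1, 2, 3, 4)
	fmt.Println("queued:", n, "pending:", len(ch), "capacity:", cap(ch))
	// prints: queued: 3 pending: 3 capacity: 3
	fmt.Println("first order out:", <-ch) // prints: first order out: 1
}
```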

By introducing a buffered channel, the cafe can handle more orders. However, it is crucial to choose an appropriate buffer size to keep customers' waiting times reasonable. After all, no customer wants to endure an excessively long wait. Sometimes it is better to reject new orders than to accept them and be unable to fulfill them in a timely manner. Moreover, be careful when using buffered channels in ephemeral containerized (Docker) applications, where random restarts are expected: pending messages in a channel live only in the process's memory, so they are lost when the process exits, and recovering them can be difficult or even impossible.

Channels vs Blocking Queues

Although they are fundamentally different (Java's BlockingQueue is used to communicate between threads, while Go's channels are used to communicate between goroutines), BlockingQueue and channels behave quite similarly. If you are familiar with BlockingQueue, understanding channels should be easy.

Common usages

Channels are a fundamental and widely used feature of Go applications, serving various purposes. Some common use cases include:

- Goroutine Communication: Channels enable message exchange between goroutines, allowing them to collaborate without sharing state directly.

- Worker Pools: As seen in the example above, channels are often employed to manage worker pools, where multiple identical workers process incoming tasks from a shared channel.

- Fan-out, Fan-in: Channels facilitate the fan-out, fan-in pattern, where work from one channel is distributed across multiple goroutines (fan-out) and their results are merged into a single channel consumed by another goroutine (fan-in).

- Timeouts and Deadlines: Channels, in combination with the select statement, can be utilised to handle timeouts and deadlines, so that programs deal with delays gracefully and avoid waiting indefinitely.
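As a preview of the fan-out, fan-in pattern listed above, here is a minimal sketch; prepare, merge, and serve are illustrative names, not a standard library API. Both workers read from the same orders channel (fan-out), and merge combines their separate output channels into one (fan-in), closing it once every input has been drained.

```go
package main

import (
	"fmt"
	"sync"
)

type order int

// prepare reads orders from in and emits one result per order on its own
// output channel, which it closes when in is closed and drained.
func prepare(name string, in <-chan order) <-chan string {
	out := make(chan string)
	go func() {
		defer close(out)
		for o := range in {
			out <- fmt.Sprintf("%s made order-%02d", name, o)
		}
	}()
	return out
}

// merge fans several channels into one and closes it after all inputs close.
func merge(inputs ...<-chan string) <-chan string {
	out := make(chan string)
	var wg sync.WaitGroup
	wg.Add(len(inputs))
	for _, in := range inputs {
		go func(in <-chan string) {
			defer wg.Done()
			for s := range in {
				out <- s
			}
		}(in)
	}
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

// serve sends n orders, fans them out to two workers, and collects results.
func serve(n int) []string {
	orders := make(chan order)
	go func() {
		defer close(orders)
		for i := 0; i < n; i++ {
			orders <- order(i)
		}
	}()

	var done []string
	for s := range merge(prepare("Candier", orders), prepare("Stringer", orders)) {
		done = append(done, s)
	}
	return done
}

func main() {
	for _, s := range serve(5) {
		fmt.Println(s)
	}
}
```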

I will delve into these usages in more detail in other articles. For now, let's conclude this introductory post by implementing the cafe scenario described above and watching channels in action. We will observe how Partier, Candier, and Stringer interact, and how channels coordinate their work so that orders are processed smoothly.

Show me your code!

```go
package main

import (
	"fmt"
	"log"
	"math/rand"
	"sync"
	"time"
)

func main() {
	// Buffered channel: Partier can leave up to 3 pending orders.
	ch := make(chan order, 3)

	wg := &sync.WaitGroup{} // More on WaitGroup another day
	wg.Add(2)

	go func() {
		defer wg.Done()
		worker("Candier", ch)
	}()

	go func() {
		defer wg.Done()
		worker("Stringer", ch)
	}()

	// Partier accepts 10 orders and passes each one to the kitchen.
	for i := 0; i < 10; i++ {
		waitForOrders()
		o := order(i)
		log.Printf("Partier: I got %v, I will pass it to the channel\n", o)
		ch <- o // blocks only while the buffer is full
	}

	log.Println("No more orders, closing the channel to signal the workers to stop")
	close(ch)

	log.Println("Wait for workers to gracefully stop")
	wg.Wait()

	log.Println("All done")
}

// waitForOrders simulates the time between customers (0 or 1 second).
func waitForOrders() {
	processingTime := time.Duration(rand.Intn(2)) * time.Second
	time.Sleep(processingTime)
}

// worker takes orders from the channel until it is closed and drained.
func worker(name string, ch <-chan order) {
	for o := range ch {
		log.Printf("%s: I got %v, I will process it\n", name, o)
		processOrder(o)
		log.Printf("%s: I completed %v, I'm ready to take a new order\n", name, o)
	}
	log.Printf("%s: I'm done\n", name)
}

// processOrder simulates making the coffee (2 or 3 seconds).
func processOrder(_ order) {
	processingTime := time.Duration(2+rand.Intn(2)) * time.Second
	time.Sleep(processingTime)
}

type order int

// String makes orders print as order-00, order-01, and so on.
func (o order) String() string {
	return fmt.Sprintf("order-%02d", o)
}
```

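You can copy this code, tweak it, and run it in your IDE to better understand how channels work. For example, change the capacity in make(chan order, 3) to 0 to recreate the unbuffered version of the cafe.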

If you find this article helpful, please motivate me with a clap. You can also check out my other articles at https://medium.com/@briannqc and connect with me on LinkedIn. Thanks a lot for reading!